Schema Learning for the Cocktail Party Problem

Woods & McDermott

Proceedings of the National Academy of Sciences, 2018

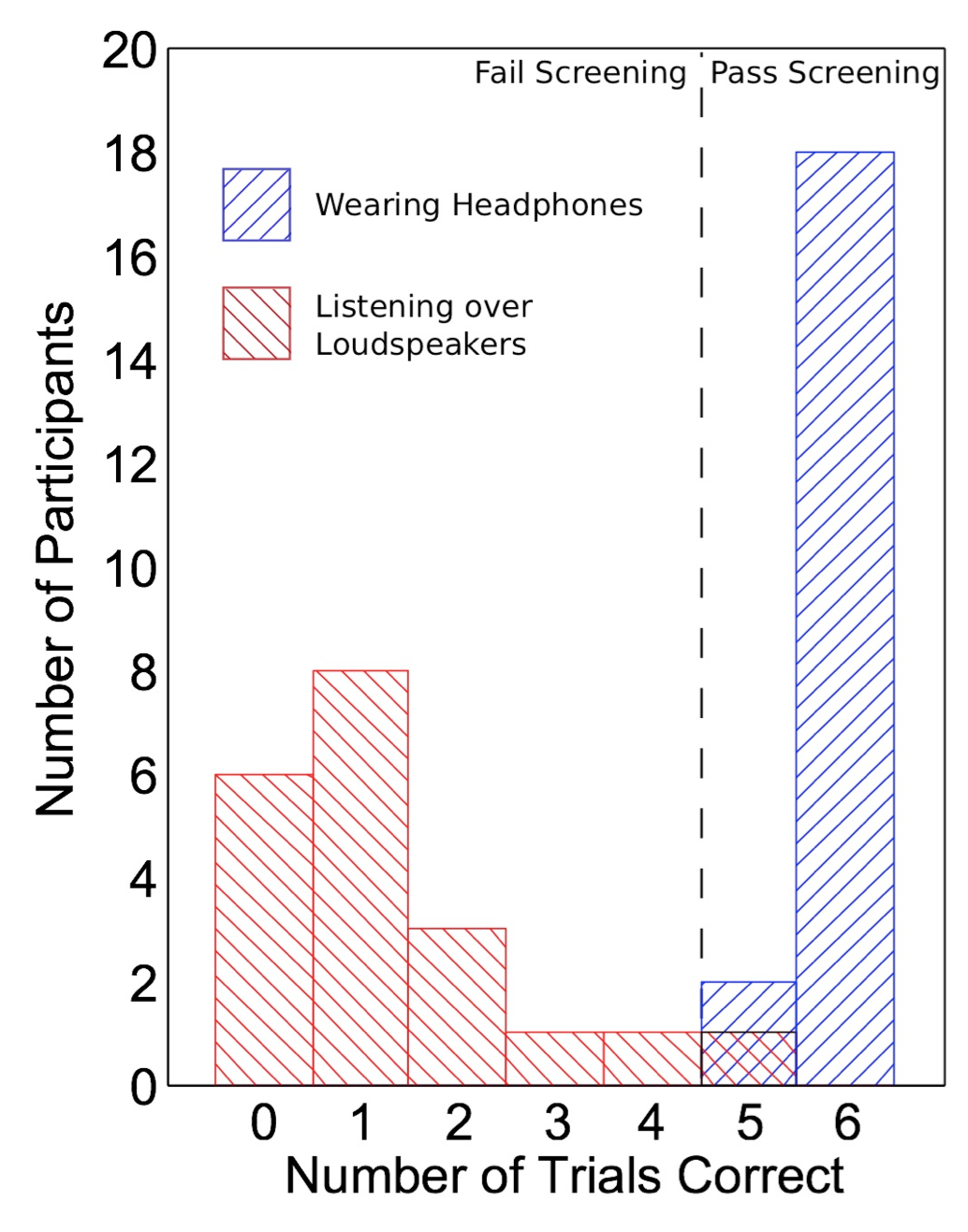

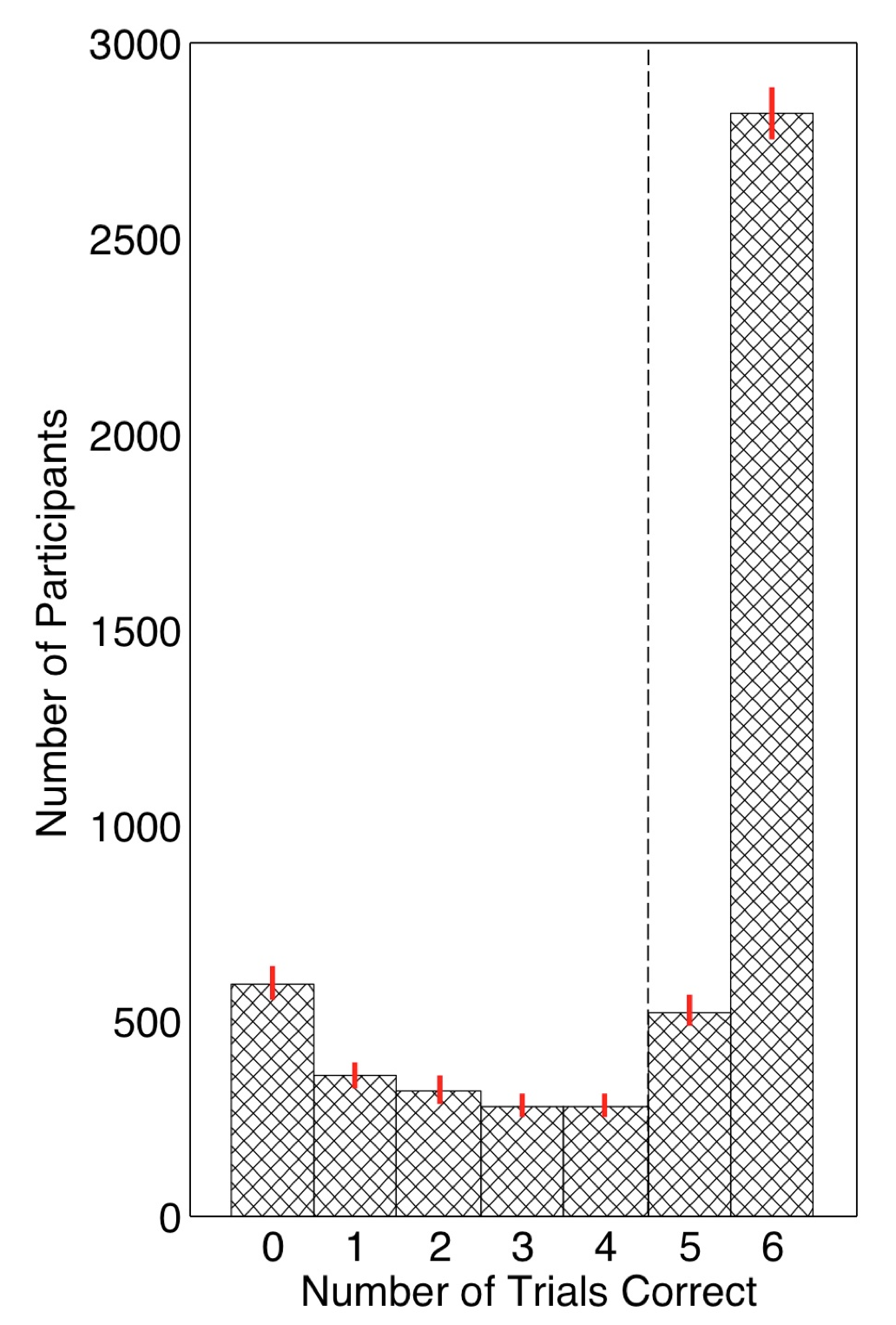

Headphone Screening to Facilitate Web-Based Auditory Experiments

Attentive Tracking of Sound Sources

Woods & McDermott

Current Biology, 2015

Can auditory attention actually "follow" a sound as it changes over time, or do we end up attending to anything similar to our target? We synthesized pairs of voices intertwined in feature space, and found that human listeners can selectively extract a particular voice from the mixture. Then we probed attention by perturbing the voices, and found that attention really moves through feature space, tracking the target sound as it changes over time.

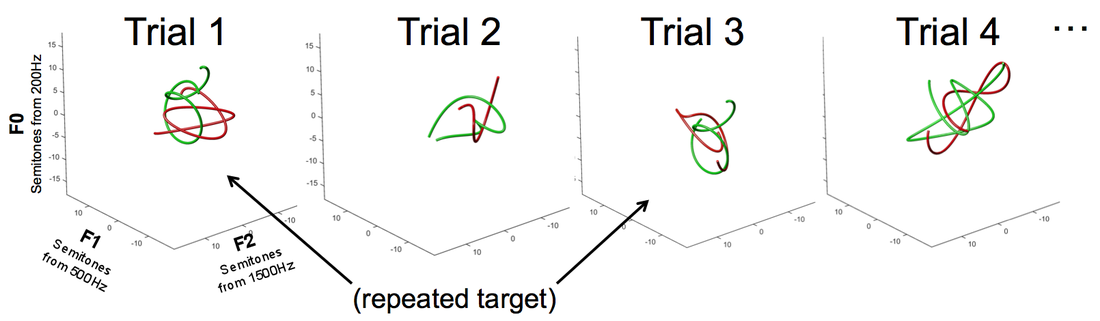

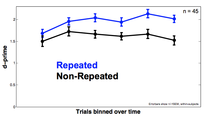

Perceptual Learning of Feature Trajectories

Sound sources in our environment often move along feature trajectories that are not random, but have some statistical structure (e.g., stereotyped prosodic patterns or animal calls). Can we use these regularities to our advantage? Our pilot results suggest that abstract feature trajectories that recur in the environment can be learned rapidly and implicitly.

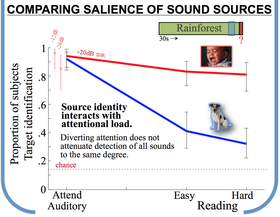

The Representation of Unattended Sounds can be Probed with Crowdsourcing

Woods & McDermott

ARO 2015, Poster

Studying unattended sounds is hard: once you ask people to report unattended sounds, they start attending differently. One can also measure sound perception while the subject is distracted with another task, but this is indirect and susceptible to adaptation over many trials. Instead of querying listeners repeatedly, we used crowdsourcing to run auditory 'one-shot' experiments, collecting just one trial from each of a large number of subjects (rather than a large number of trials from a few subjects, as is typical in psychophysics). We found that as people did tasks requiring more and more concentration, irrelevant sounds increasingly went unheard. However, when listeners were distracted, some sounds were much more likely to be heard than others!

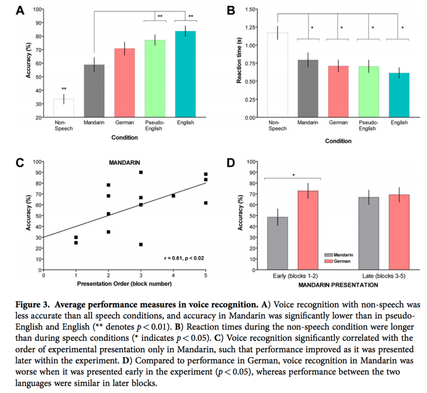

Multiple levels of linguistic and paralinguistic features contribute to voice recognition

Zarate, Tian, Woods, & Poeppel

Scientific Reports, 2015

Voice or speaker recognition is critical in a wide variety of social contexts. We investigated the contributions of acoustic, phonological, lexical, and semantic information to voice recognition, and showed that voice recognition significantly improved as more information became available, from purely acoustic features in non-speech to additional phonological information varying in familiarity. Moreover, we found that recognition performance transfers between training and testing in phonologically familiar contexts. These results provide evidence suggesting that bottom-up acoustic analysis and top-down influence from phonological processing collaboratively govern voice recognition.